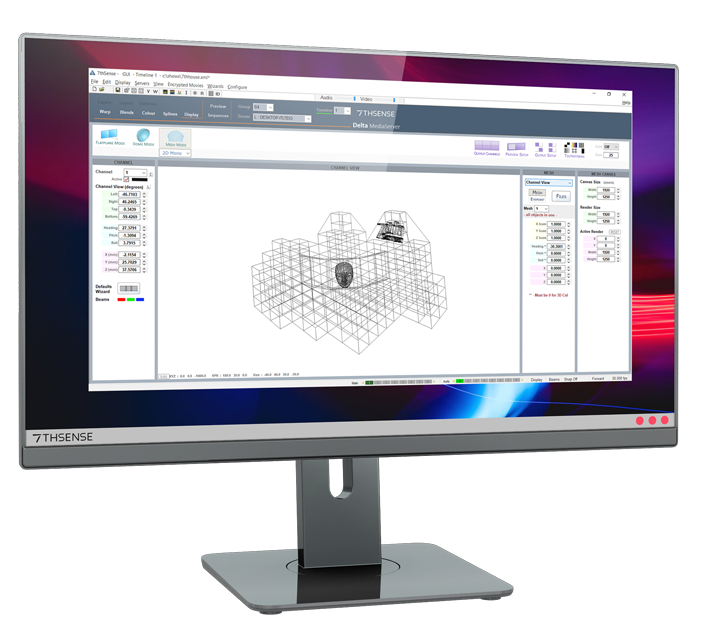

3D Auto-Cal is built into Delta Mesh Mode as a key alignment stage, helping to minimise the time spent manually aligning projectors on-site.

In Mesh Mode, measure each projector’s position, angle and lens characteristics, then input them directly into Delta to build a 3D virtual set that matches the real 3D scene.
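As a rough illustration of what those measurements describe, the sketch below records one projector and derives its field of view. It is not a Delta data structure or API: `ProjectorSpec`, its field names, and the use of throw ratio as the lens characteristic are all assumptions made for the example, and it assumes an on-axis lens with no lens shift.

```python
import math
from dataclasses import dataclass


@dataclass
class ProjectorSpec:
    """Hypothetical record of one measured projector (not a Delta type)."""
    position_m: tuple[float, float, float]    # measured x, y, z in metres
    rotation_deg: tuple[float, float, float]  # measured heading, pitch, roll
    throw_ratio: float                        # throw distance / image width
    aspect_ratio: float = 16 / 9              # image width / image height

    def horizontal_fov_deg(self) -> float:
        # Half the image width subtends atan(1 / (2 * throw_ratio)).
        return math.degrees(2 * math.atan(1 / (2 * self.throw_ratio)))

    def vertical_fov_deg(self) -> float:
        # Image height is width / aspect_ratio, so scale the half-extent.
        half_height = 1 / (2 * self.throw_ratio * self.aspect_ratio)
        return math.degrees(2 * math.atan(half_height))

    def image_width_at(self, distance_m: float) -> float:
        # Width of the projected image on a flat surface facing the lens.
        return distance_m / self.throw_ratio
```

With a throw ratio of 1.5, for example, a projector 6 m from a flat surface would fill an image 4 m wide under these assumptions.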

Overcome the tricky realities around on-site accuracy using Delta’s fully adjustable, 6-point channel configuration. Calculate position and lens characteristics using markers placed on your 3D model and then projected onto your scene.

Achieve near-perfect geometric alignment from 3D virtual models onto the real thing.

Using low-latency, high-precision camera-based tracking systems, Delta provides a tracked match between the virtual and physical worlds by moving the corresponding 3D object in tandem with the physical model.

With Mesh Mode’s workflow, go beyond tracking incoming data directly. Invert, reassign and scale incoming data dynamically through the powerful Sequence Editing capability.
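To make the idea of inverting, reassigning and scaling incoming data concrete, here is a minimal sketch in Python. It does not use Delta's Sequence Editing interface: `ChannelMap` and `remap` are hypothetical names, and the tracker field names and units in the example are assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelMap:
    """Hypothetical rule describing how one output value is derived."""
    source: str           # name of the incoming field to read (reassign)
    scale: float = 1.0    # multiplier applied to the value (scale)
    invert: bool = False  # flip the sign before scaling (invert)


def remap(sample: dict[str, float],
          mapping: dict[str, ChannelMap]) -> dict[str, float]:
    """Build an output sample by applying each rule to the incoming sample."""
    out = {}
    for target, rule in mapping.items():
        value = sample[rule.source]
        if rule.invert:
            value = -value
        out[target] = value * rule.scale
    return out


# Example: a tracker reporting millimetres with Z up, feeding a virtual
# scene that uses metres with Y up (both conventions are assumptions).
mm_to_m = 0.001
mapping = {
    "x": ChannelMap("x", scale=mm_to_m),
    "y": ChannelMap("z", scale=mm_to_m),               # reassign Z to Y
    "z": ChannelMap("y", scale=mm_to_m, invert=True),  # reassign and invert
}
scene_position = remap({"x": 1000.0, "y": 2000.0, "z": 500.0}, mapping)
```

Keeping the rules as data rather than code means they can be changed while tracking is running, which is the sense in which the remapping is dynamic.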

Delta is the ultimate server for large-screen cinema, multi-channel digital planetariums, large theatres, flight simulators, and full-scale building projections.

Our knowledge has built the best display management tools available, meaning Delta can perform with any display surface, from regular screens to irregular surfaces and 3D objects.

Delta supports a range of 3D stereo modes, including passive stereo, active stereo, anaglyph and chequerboard passive stereo. Additional 3D support features include:

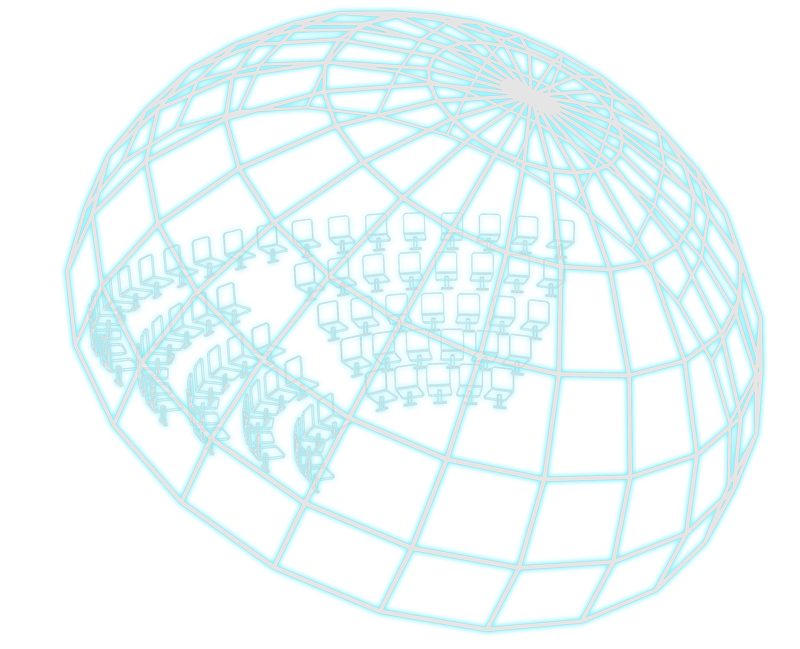

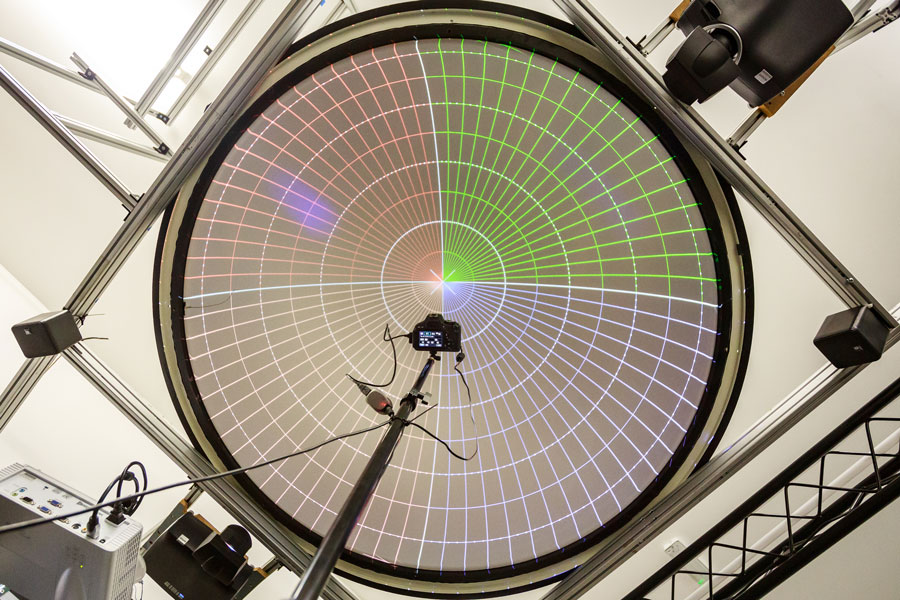

Dome Mode provides an exact view of the ‘real system’ dome surface for optimum alignment preparation, making things easy for you and your team.

Dome alignment can be refined manually with Delta’s built-in warp & blend controls, or automatically using any of a range of third-party auto-alignment systems. Delta supports fulldome fisheye masters (for example 4K x 4K), playing them directly onto the dome in real time with no requirement for offline carving. It also offers top-end support for a mix of flat, panoramic or channel-mapped media, which can be placed on-screen in intuitive angular canvas units. 3D dome media and displays are also supported.
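To show how a square fisheye master relates to angular positions on a dome, the sketch below converts a pixel coordinate into azimuth and elevation. It is an illustration only, not Delta's internal mapping: it assumes an azimuthal equidistant fisheye centred in the frame with the zenith at the image centre, and the function name and azimuth convention (0° at the top of the image, increasing clockwise) are hypothetical choices.

```python
import math


def fisheye_pixel_to_angles(px: float, py: float, size: int,
                            fov_deg: float = 180.0):
    """Map a point in a square fisheye master to (azimuth, elevation).

    px, py  -- continuous pixel coordinates, origin at the top-left corner
    size    -- width and height of the master in pixels (e.g. 4096)
    fov_deg -- angle covered by the full image circle (assumed 180 degrees)

    Returns None for points outside the image circle.
    """
    half = size / 2.0
    dx = (px - half) / half  # -1 at the left edge, +1 at the right edge
    dy = (py - half) / half  # -1 at the top edge, +1 at the bottom edge
    r = math.hypot(dx, dy)
    if r > 1.0:
        return None
    # Equidistant fisheye: radius is proportional to the angle from zenith.
    elevation = 90.0 - r * fov_deg / 2.0
    # Azimuth has no meaning at the exact centre (r == 0).
    azimuth = math.degrees(math.atan2(dx, -dy)) % 360.0
    return azimuth, elevation
```

Under these assumptions, a point halfway between the centre and the edge of a 180° master sits at 45° elevation, and the corners of the square frame fall outside the image circle.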